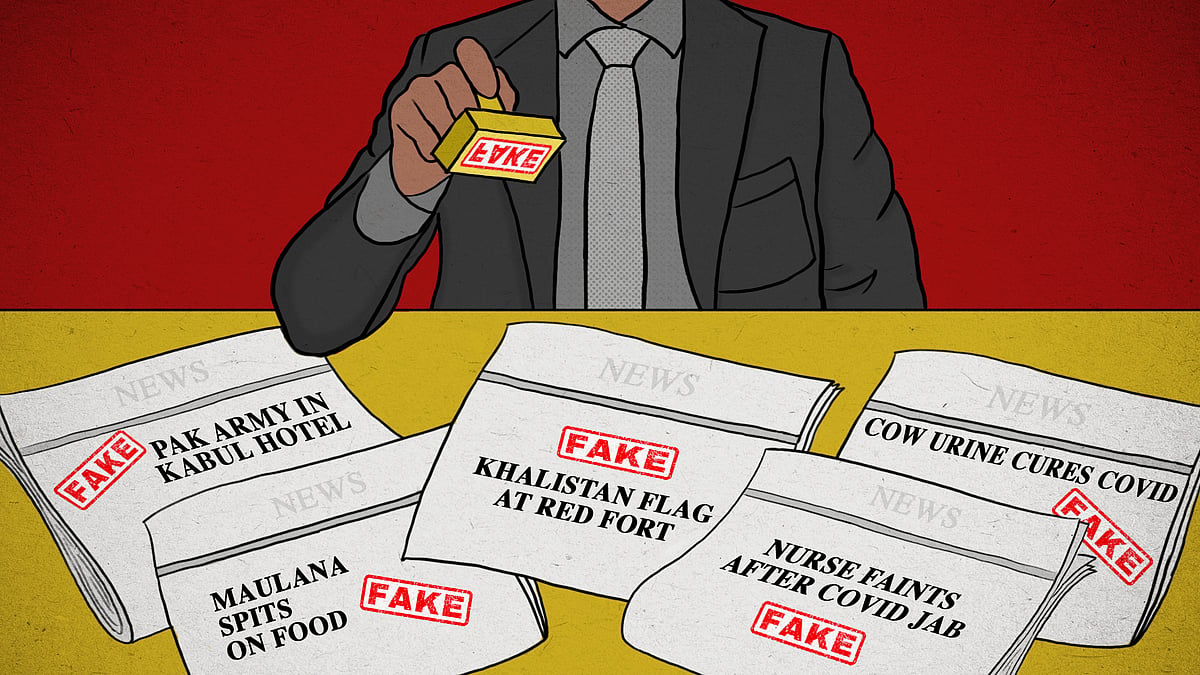

From Nigeria to India, Big Tech does little or nothing to curb fake news

Social media platforms often ignore misinformation in regional languages, even though it has real-world consequences.

India-based fact-checker Bharat Nayak monitors more than 176 Hindi-language WhatsApp groups for a research project he is working on to understand news consumption among Indians.

Until a few months ago he worked as founding editor at the Indian digital news and fact-checking site The Logical Indian, overseeing production of fact-checks in the form of text articles, videos and social media posts.

Often, he would take it upon himself to debunk viral news, effortlessly adopting a mix of Hindi and English in an accent familiar to states across central and north India.

Now, as an independent fact-checker, Nayak dabbles in research, monitoring the media landscape and working on projects that focus on improving media literacy among the public.

“I see that [in] almost none of the places [WhatsApp groups] anyone talks about fact-checking,” says Nayak, who is also a trainer with the Google News Initiative.

“Or if there is any misinformation being spread, no one replies with the fact-check.”

Nayak says that he and other fact-checkers have noticed that accurate news published in several Indian languages is often flagged by Facebook as misinformation, and that even the fact-checks themselves have sometimes been labelled as misinformation.

To Nayak and other digital media experts interviewed by SciDev.Net, it is clear that little attention is paid by social media platforms to false news in non-European languages.

In the absence of any data, the true scale of misinformation in lesser-spoken languages is unknown, says Nigerian journalist Hannah Ajakaiye, an International Center For Journalists Knight Fellow at FactsMatterNG, an initiative to promote information integrity and public media literacy in Nigeria.

She believes there is a strong connection between language and acceptance. “It makes [misinformation in lesser-spoken languages] more dangerous,” she says, explaining that people are more likely to believe what they are reading is true.

Fact-checking organisations like these often work round the clock, tracking false information linked to trending topics, to counter misinformation and disinformation.

Watchdog organisations have consistently demanded a misinformation crackdown on platforms such as Facebook, TikTok, YouTube, X (formerly Twitter), and messaging apps like WhatsApp. All these big tech platforms have different policies to monitor, regulate and reduce misinformation.

Covid-19 infodemic

The pressure on big tech to tackle false news comes and goes in cycles, say observers. During the Covid-19 outbreak, there was more pressure to stop fake news about the virus and vaccines, from the medical community and others.

In 2021, Facebook said it had removed 18 million pieces of misinformation about Covid-19 from Facebook and Instagram, although the number of items in lesser-known languages is likely to be a fraction of this.

During the infodemic that accompanied the global health crisis, there was no shortage of fake content to root out.

Onyinyechi Micheal, 17, remembers her foster mother frantically rushing into the room one night in February 2020, just after the first Covid-19 case was detected in Nigeria. She had received an audio message on a Facebook group which advised that consumption of an onion and ginger broth would prevent Covid-19 infection.

The “health tip”, in Igbo language, was shared on an all-Igbo group. Micheal never contracted the virus but has no idea whether that was down to the broth, or any of the subsequent concoctions she took to ward off the virus.

While drinking onion and ginger soup might not have done Micheal any harm, as Covid-19 deaths increased, so did the bogus medical recommendations on social media platforms, which became pivotal for connectivity and information dissemination during the pandemic.

Millions of text articles, videos and audio clips – mostly about health – were churned out globally in thousands of languages. In the absence of a cure or access to a vaccine, netizens relied on viral health tips from within their communities, speaking their language.

Hundreds of languages

Battling this cycle of false information in hundreds of languages across different geographies is proving to be hard.

Facebook, now Meta Platforms Inc., has roughly 3 billion active users. In 2016, Meta initiated a third-party fact-check partnership of up to 90 independent fact-checking organisations certified through the International Fact-Checking Network (IFCN). While it was hailed as instrumental for its efforts in debunking fake news, Western language content seems to have taken centre stage, digital media specialists say.

Peter Cunliffe-Jones, advisory board member of the IFCN, acknowledges that big tech companies have made progress in combating misinformation, but says they have not done a good job of countering the spread of harmful information generally, and especially in regional, local, or lesser-known languages.

“It is true that some platforms – Meta platforms among them – have done more recently to increase the number of languages in which they respond to misinformation,” he told SciDev.Net by email.

However, he cautioned that this was not enough.

“They all need to do a lot more,” he said, adding: “Ultimately, the effects of misinformation will be felt by everyone, everywhere.”

YouTube says it has clear guidelines on misinformation and routinely takes down anything that violates these guidelines.

‘Haven for misinformants’

The IFCN’s 2022 State of the Fact Checkers report notes that Meta’s Third Party Fact-Checking programme is the leading source of funding for fact-checking organisations, contributing 45.2 per cent of their income. However, neither Meta’s site nor the report mentions how this funding was spread across different languages.

In a months-long investigation, SciDev.Net looked at how third-party fact-checking differs in local and regional languages in Nigeria and India when compared to the English language.

Meta relies on two ways to tackle false information: artificial intelligence, and a human network made up of trusted partners, independent third-party fact-checking organisations and its users.

Niam Yaraghi, a senior fellow at the Brookings Institution, a US-based think-tank, noted in a commentary that Facebook relies on its community of users to flag false information and then “uses an army of real humans to monitor such content within 24 hours to determine if they are actually in violation of its terms of use”.

Yaraghi says live content is only monitored by humans “once it reaches a certain level of popularity”.

However, things become complicated when different languages are in the mix. Nigeria and India together have more than 1.6 billion people, speaking thousands of languages and dialects.

Social media platforms thrive in dozens of languages in these countries, yet the attention paid to them by the platforms – for fact-checking or resource allocation – is skewed when compared to countries in the global North.

Onyinyechi does not know exactly which group her foster parents got the information about the broth from, but similar community groups still exist, pushing misleading narratives of this sort.

“Local languages are a haven for misinformants. Without much attention, they are used to bypass scrutiny,” says Allwell Okpi, researcher and community manager at Africa Check, a Meta Platforms Inc. third-party fact-checking news platform in Nigeria.

Where is the cash?

Regulation of content varies widely, our investigation found.

Meta’s website notes that “WhatsApp invested US$1 million to support IFCN’s Covid-19 efforts, including the CoronaVirusFacts Alliance, in which nearly 100 fact-checking organisations in more than 70 countries produced more than 11,000 fact-checks about the Covid-19 pandemic in 40 languages”.

WhatsApp, which is owned by Meta, also launched a US$500,000 grant programme to support seven fact-checking organisations’ projects fighting vaccine misinformation, in partnership with the IFCN, Meta’s statement notes.

“Meta, Google and others work on fake news a lot when there is a buzz around it and they are being questioned for the same,” claims Nayak.

“Once the buzz around it gets silent, then their attention towards it starts reducing.” This is particularly visible in developing countries like India, he says.

The lack of action on tackling false information is linked to poor investment and shortage of resources, says Rafiq Copeland, senior advisor for platform accountability at Internews, a media non-profit.

Global south countries are “certainly the largest markets in terms of users. But the advertising revenue that the companies receive per user is much higher in the US and Europe,” says Copeland.

While a majority of users on platforms like Facebook are in Asia, Africa, the Middle East, or Latin America, he says “they account for around half or less than half the total revenue that the company receives”. This means the comparative investment that happens per language varies, he explains.

In 2021, the largest share of Meta’s revenue came from the US (US$48.4 billion), followed by the European region, including Russia and Turkey (US$29 billion). The Asia-Pacific region generated US$26.7 billion, while Africa, Latin America and the Middle East together generated just US$10.6 billion.

Over 90 per cent of Meta’s revenue in the second quarter of 2023 was generated from ads – US$31.5 billion of a US$32 billion total.

Who are the watchdogs?

India is the world’s top source of social media misinformation on Covid-19, according to a study by researchers at Jahangirnagar University, Bangladesh, published in 2021. Although more than 496 million people in the country were on Meta platforms as of September 2023, there are only 11 third-party fact-checking partners in India, together tackling 16 languages, including English. Even so, the country has the highest number of Meta third-party fact-checkers.

Meanwhile, Nigeria, Africa’s most populous country, with a population of over 200 million people, has more than 500 indigenous languages and 31.6 million social media users – a majority of whom are active on Meta platforms. It was ranked 23rd on the social media misinformation list on Covid-19.

Here, Meta has just three third-party fact-checkers – Dubawa, Africa Check and Associated Press News – tasked with the watchdog duties of debunking and verifying misinformation in four languages, including English.

Producing fact-checks in local or lesser-spoken languages is often an in-house initiative of third-party partners. Meta, for example, currently has partners in 118 countries covering 90 languages.

Fact-check limitations

According to Okpi and other fact-checkers questioned by SciDev.Net, third-party fact-checking isn’t yet efficient in some languages. For example, while a piece of misinformation in English might go viral, its fact-check in a lesser-spoken language may not gain the same reach.

“A lot of what we do currently is we do the initial fact check in English and then we translate to Hausa or Yoruba to ensure that the language’s audience can access it,” says Kemi Busari, editor at Nigerian fact-checking organisation Dubawa.

“The intention is to go beyond that.

“We want to do it in Igbo, Kanuri and Krio. We want to do three in Ghana and a couple of others before the end of this year.”

SciDev.Net found that Meta pays at least US$300-$500 per fact check.

But fact-checking hyper-local and user-generated content, in lesser-spoken languages, is a bit more challenging and often requires more resources to get to the truth, says Bharath Guniganti, a fact-checker at Hyderabad-based Factly, a Meta third-party partner and bilingual platform offering coverage in Telugu and English.

Fact-checking mainstream claims, in English or dominant languages, can usually be done easily because there is a digital trail. However, content produced by users locally is harder to verify as it may need on-ground resources, he says.

“We need more newsrooms as part of the initiative, we need more focus, we need more [fact-checkers’] voices in the communities because information that we are privy to shifts in different ways,” adds Busari.

‘Safety at stake’

Copeland of Internews says platforms have to invest in language equality. And even this needs to be backed up by “transparency and accountability to show that investments have been made,” he believes.

Internews reviewed Meta’s Trusted Partner Program, another initiative to keep Meta’s products safe and protect users from harm. This comprises organisations and users who alert the company to dangerous content, harmful trends, and other online risks and even query decisions of Facebook and Instagram on behalf of at-risk users globally.

In its 2023 report, Safety At Stake: How to save Meta’s Trusted Partner Program, Internews found several red flags, including understaffing, lack of resources, erratic and delayed responses to urgent threats raised by the partners, and disparity of services based on geography.

“Whilst Ukrainian partners can expect a response within 72 hours, in Ethiopia equivalent reports relating to the Tigray War can go unanswered for several months,” the report states.

Internews and Localization Labs released an analysis of Google and Meta’s community policies in four different non-Western languages in January 2023. They found that policies on both platforms contain “systemic errors, and English-language references were not applicable and lacked human review for usability and comprehension.” The analysis called on Meta and Google “to improve efforts to effectively communicate with the growing number of users in non-Anglophone countries”.

Both reports recommend better resourcing, transparency and accountability.

‘Real world harm’

Senior fact-checkers at BoomLive, one of the third-party fact-checkers working in Hindi, Bangla and English, noted in an email interview that misinformation travels faster in local or regional languages than in English. “According to misinformation trends, a false claim associated with a video or image or audio generates mostly in Hindi and then snowballs into other languages,” they said.

Additionally, the topics of misinformation often reflect what is trending in the media, explained Guniganti of Factly. He added that misinformation is most often dominated by political news or events, although Covid-19 was an outlier.

According to Meta, once an article is rated false, the platform doesn’t remove it but reduces its visibility and notifies the audience, to limit the spread of such misinformation.

Social media companies have adopted two approaches to fight misinformation, analysts say. The first is to block such content outright, while the second is to provide correct information alongside the content with fake information.

Guniganti says: “Platforms take down only misinformation content that violates their community guideline of causing harm as such, but not content that gives false information as it’s not a violation of the community guidelines.”

Busari agrees. “So they flag false information to you [the third-party partners] on Facebook or Instagram. And once you are able to do some fact-checks, you can escalate it to Facebook and they take action,” he says.

Sometimes the platforms reduce the spread of such false information, and sometimes the content is removed entirely.

When content is not deemed harmful or in violation of community guidelines, it is not removed by the platform. However, here, third-party fact-checkers rate the content for false information and Facebook provides alternative information alongside the fake content.

Nayak questions this notion of harm. He says individual pieces of content may not be harmful or violate community guidelines, but they accumulate to cause real-world harm to society. He gives the example of how Islamophobic content thrived during the pandemic, resulting in actual attacks on Muslims in India.

“There was a video of a man licking vegetables and fruits and that was linked to spreading coronavirus,” he recalls. While the video was unrelated to the pandemic, it went viral on social media platforms, including messaging platforms like WhatsApp.

People would say “don’t buy anything from Muslims or eat anything they offer”, says Nayak.

Nayak produced a fact-check in Hinglish – a combination of Hindi and English – which went viral. But the damage was done.

“Even if you bust the fake news, the biases it creates is going to remain with you forever,” he says. “That helped the anti-Muslim narrative to thrive.”

“In India, Covid was unique in a way that Muslims were held responsible for Covid spread,” says Nayak. He believes that Covid-19 was a tipping point during which social relationships between Muslim and Hindu people were damaged.

For Nayak, real-world harm is subjective. Moreover, content creators capitalise on news cycles just for clicks, further worsening the impacts of false news.

‘Hustle for money’

While there’s an assumption that misinformation is often shared by innocent users, or disinformation is created by those intending to harm, Copeland calls attention to a third category.

“There’s a big category of harmful content which is not intended to harm necessarily, but just intending to generate engagement,” he says.

This is financially motivated disinformation where content creators are trying to get clicks, “and ultimately through clicks trying to monetise their content,” he says. Such creators post around emotive issues such as politics or politicised issues, including health and climate.

“It’s an effective way to get clicks and it can be very profitable,” Copeland explains.

For example, an Islamic cleric in Nigeria – speaking in the Hausa language in YouTube videos – claimed the coronavirus was a Western agenda of depopulation. Each video amassed over 45,000 views between 2021 and 2022 before being flagged in a report.

For a channel monetising its content, traffic and engagement profits are estimated at up to US$29 per 1,000 views. A social media content influencer, who didn’t wish to be named, told SciDev.Net that the “hustle for the money is real”, and most creators will put out anything to gain traction and revenue.

Regional moderation ‘failed’

At one point, platforms including Facebook, YouTube and TikTok had enlisted the services of regional content moderators, but the process failed because of pay disparities between local and international staff, along with other professional disagreements, says Ajakaiye of FactsMatterNG.

This, coupled with lax or non-existent internet regulations in countries in the global South, has given rise to a proliferation of synthetic media, such as deepfake videos or manipulated audio, she says.

“It’s also something that they’re not really considering,” concludes Ajakaiye. She says platforms just “bypass” misinformation in local languages “feeling it’s not important and it’s not something they really want to dedicate resources to”.

In a statement to SciDev.Net, a YouTube spokesperson said that the platform has clear guidelines on misinformation, including specifically on medical misinformation.

“In 2022, globally, we have removed more than 140,000 videos for violating the vaccine provisions of our Covid-19 misinformation policy. These provisions took effect in October 2020,” the statement said.

It added that other forms of misinformation are routinely taken down and in the second quarter of 2023 YouTube removed more than 78,000 videos for violating these policies.

In 2021, the platform provided Full Fact, an independent fact-checking company, with a US$2 million grant and expertise to create AI tools for fact-checking.

YouTube says this technology is now aiding fact-checking efforts in South Africa, Nigeria, and Kenya.

SciDev.Net also reached out to Meta, TikTok and Telegram for a statement but had not received a response by the time of publication.

As such, it is unclear how many resources these platforms have dedicated to combating misinformation in lesser-spoken languages in Nigeria and India or in other non-European languages, how many of those languages they can detect using AI, or what would be classified as “real-world harm”.

The story was first published on SciDev.Net. Mahima Jain from India and Jennifer Ugwa from Nigeria are part of the Population Reference Bureau's Public Health Reporting Corps.
